A web crawler tool emulates search engine bots. Web crawlers are indispensable for search engine optimization. But leading crawlers are so comprehensive that their findings — lists of URLs and the various statuses and metrics of each — can be overwhelming.

For example, a crawler can show (for each page):

- Number of internal links,

- Number of outbound links,

- HTTP status code,

- A noindex meta tag or robots.txt directive,

- Amount of non-linked text,

- Number of organic search clicks the page generated (if the crawler is connected to Search Console or Google Analytics),

- Download speed.

Crawlers can also group and segment pages based on any number of filters, such as a certain word in a URL or title tag.
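To make segmenting concrete, here is a minimal Python sketch that groups crawled pages by keywords found in the URL or title tag. The `Page` record, its fields, and the `segment_pages` function are hypothetical stand-ins for a crawler's export, not any tool's API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Page:
    """One row of a crawl export (hypothetical fields for illustration)."""
    url: str
    title: str


def segment_pages(pages, keywords):
    """Group page URLs by keyword found in the URL or title tag.

    A page lands in every segment whose keyword it contains; pages that
    match no keyword go into an "other" segment.
    """
    segments = defaultdict(list)
    for page in pages:
        haystack = (page.url + " " + page.title).lower()
        matched = [kw for kw in keywords if kw.lower() in haystack]
        for kw in matched:
            segments[kw].append(page.url)
        if not matched:
            segments["other"].append(page.url)
    return dict(segments)
```

Because a page can match several keywords, segments may overlap; that mirrors how crawler filters are usually applied independently.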

There are many quality SEO crawlers, each with a unique focus. My favorites are Screaming Frog and JetOctopus.

Screaming Frog is a desktop app. It offers a limited free version for sites with 500 or fewer pages; otherwise, it costs approximately $200 per year. JetOctopus is browser-based. It offers a free trial and costs $160 per month. I use JetOctopus for larger, more sophisticated sites and Screaming Frog’s free version for smaller ones.

Whichever crawler you choose, here are the top six SEO issues I look for when crawling a site.

Using Web Crawlers for SEO

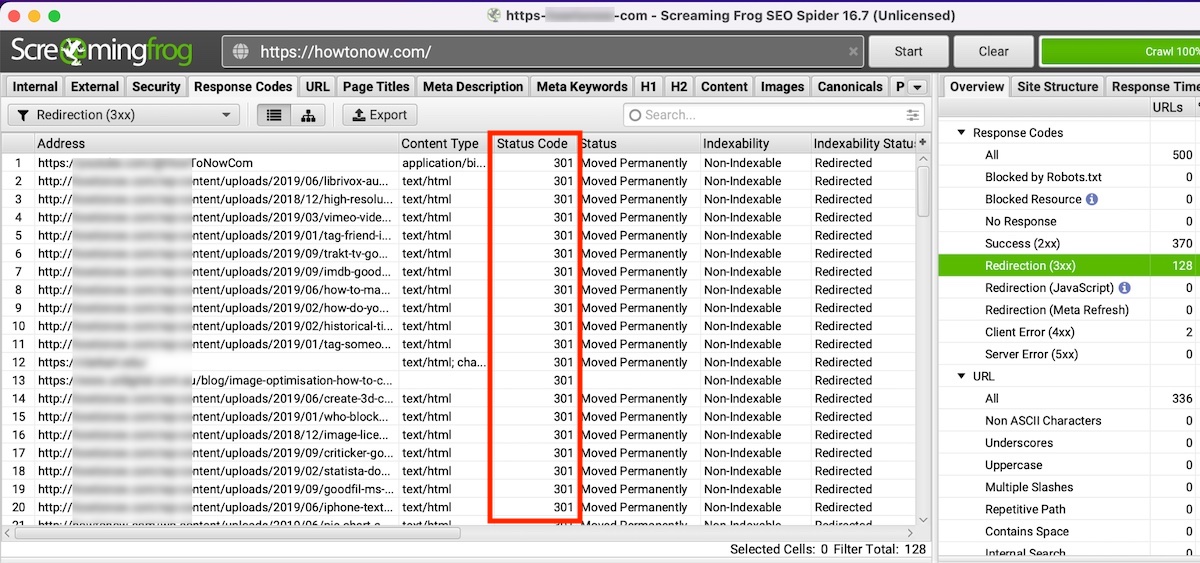

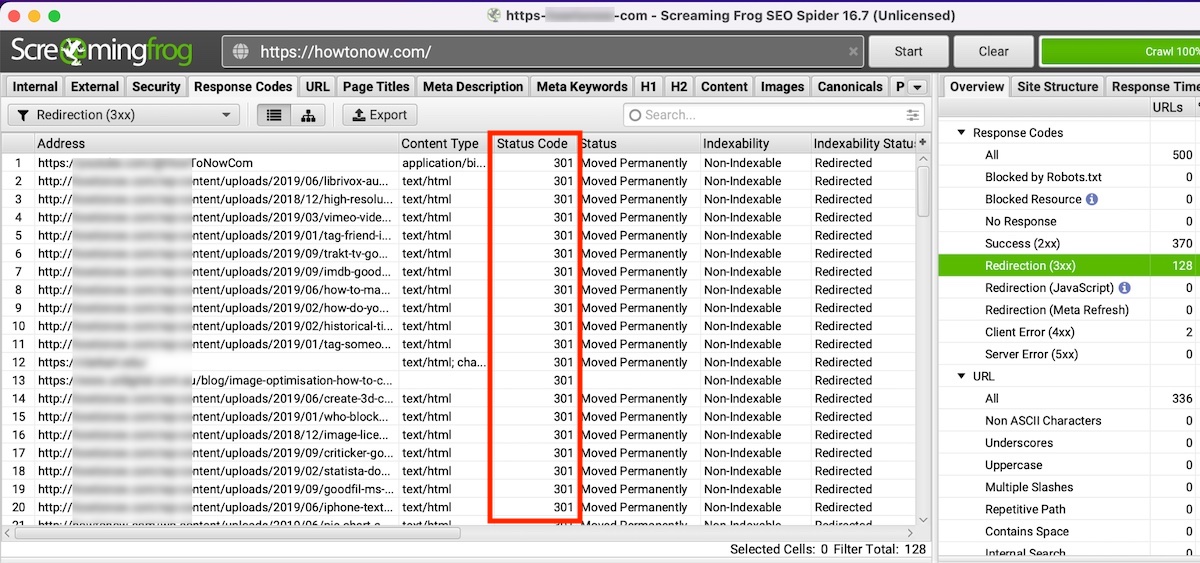

Error pages and redirects. The primary reason for crawling a site is to find and fix errors (broken links, missing elements) and redirects. Any crawler gives quick access to both, so you can fix each one.

Most people focus on fixing broken links and neglect redirects, but I recommend fixing both. Internal redirects slow down servers and leak link equity.

Screaming Frog lists all URLs that return a 301 (moved permanently) redirect status code.
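If you work from an exported crawl file rather than the tool's interface, the same triage can be scripted. This is a minimal sketch that assumes a CSV export with "Address" and "Status Code" columns; rename them to match your crawler's export.

```python
import csv
import io


def triage_status_codes(csv_text):
    """Split crawled URLs into redirects (3xx) and errors (4xx/5xx).

    Assumes "Address" and "Status Code" columns (an assumption; adjust
    to your export). Rows without a numeric status code are skipped.
    """
    redirects, errors = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        code_text = (row.get("Status Code") or "").strip()
        if not code_text.isdigit():
            continue
        code = int(code_text)
        if 300 <= code < 400:
            redirects.append((row["Address"], code))
        elif code >= 400:
            errors.append((row["Address"], code))
    return redirects, errors
```

To use it on a real export, read the file with `open(path, newline="", encoding="utf-8")` and pass its contents to `triage_status_codes`.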

—

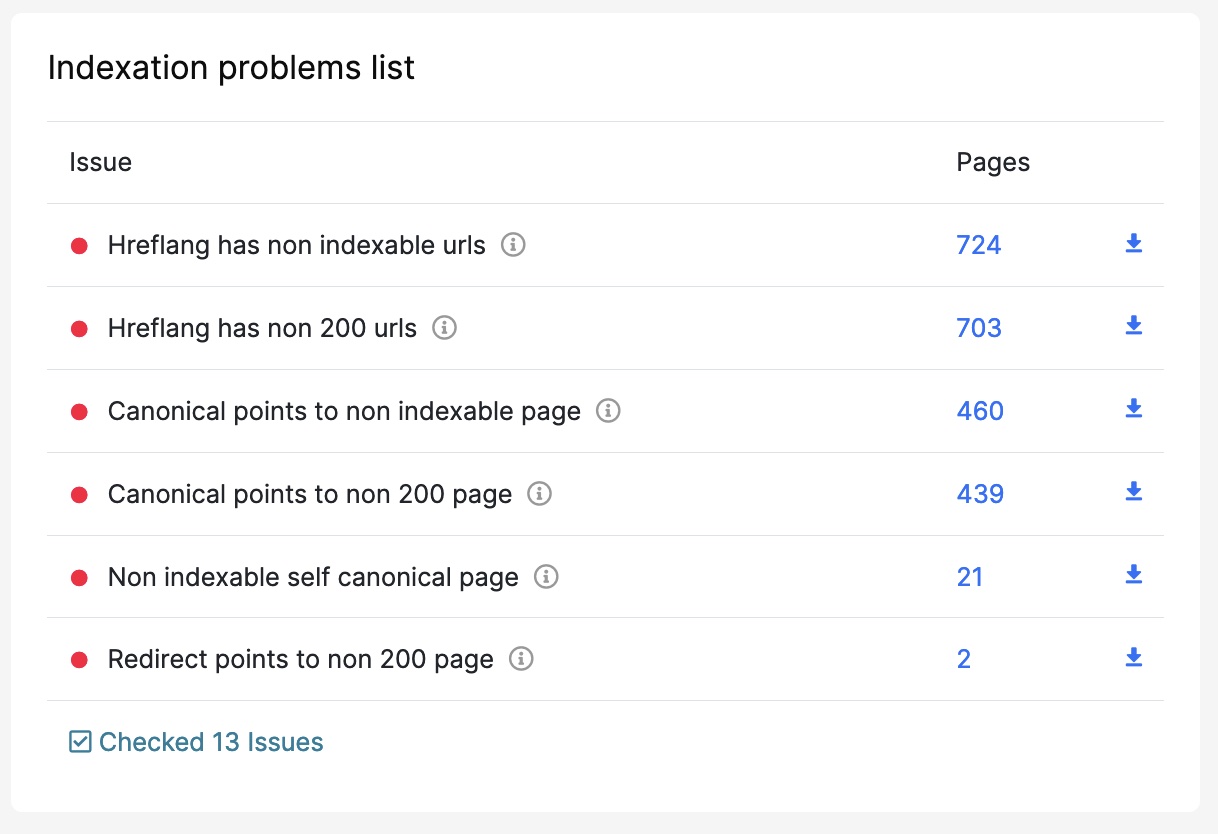

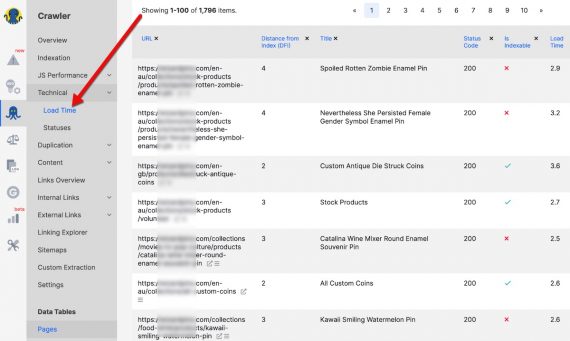

Pages that cannot be indexed or crawled. The next step is to check for accidental blocking of search crawlers. Screaming Frog has a single filter for that — pages that cannot be indexed for various reasons, including redirected URLs and pages blocked by the noindex meta tag. JetOctopus has a more in-depth breakdown.
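As a rough illustration of what these filters check, the sketch below flags two of the blocking reasons mentioned above: a robots.txt disallow rule and a noindex robots meta tag. It uses only Python's standard `urllib.robotparser` and `html.parser`; the `indexability` function is hypothetical, and it does not check HTTP `X-Robots-Tag` headers or canonical tags.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def indexability(url, html, robots_txt, user_agent="Googlebot"):
    """Return the reasons a page may be blocked (an empty list if none found)."""
    reasons = []
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())  # parse robots.txt text already fetched
    if not robots.can_fetch(user_agent, url):
        reasons.append("blocked by robots.txt")
    meta = RobotsMetaParser()
    meta.feed(html)
    meta.close()
    if "noindex" in meta.directives:
        reasons.append("noindex meta tag")
    return reasons
```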

—

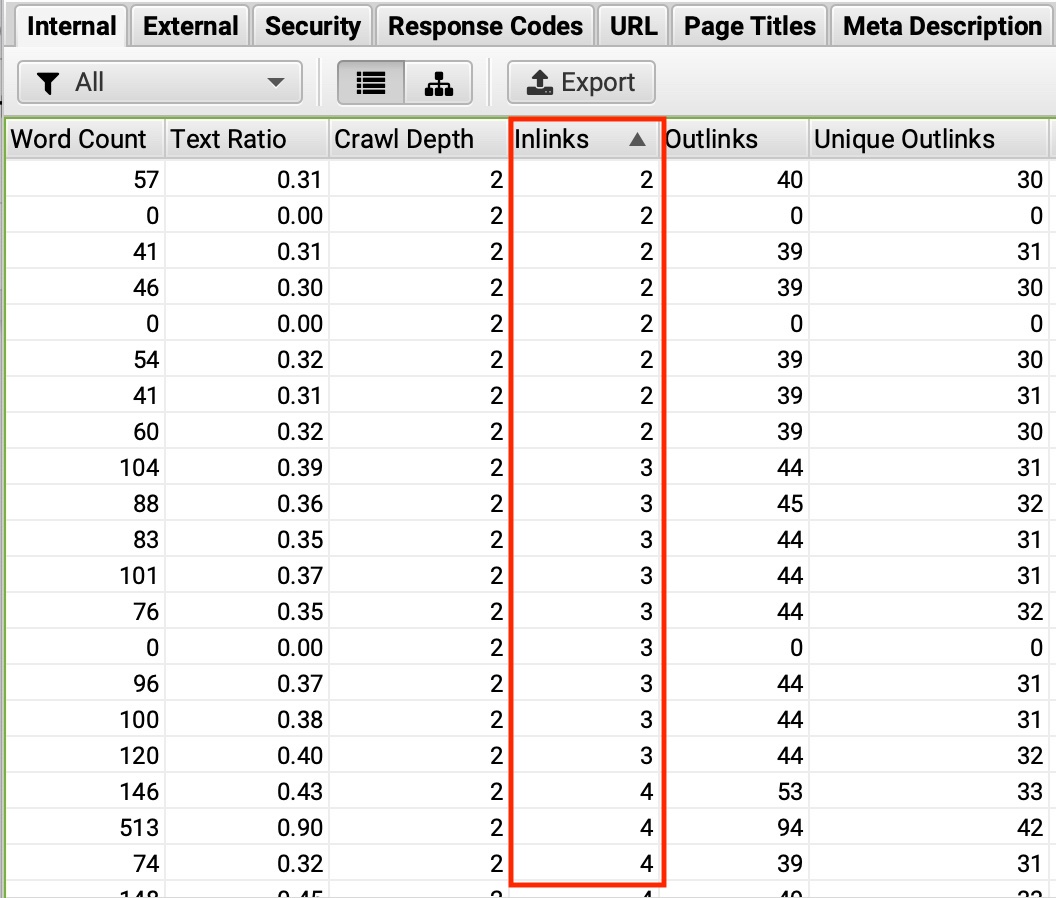

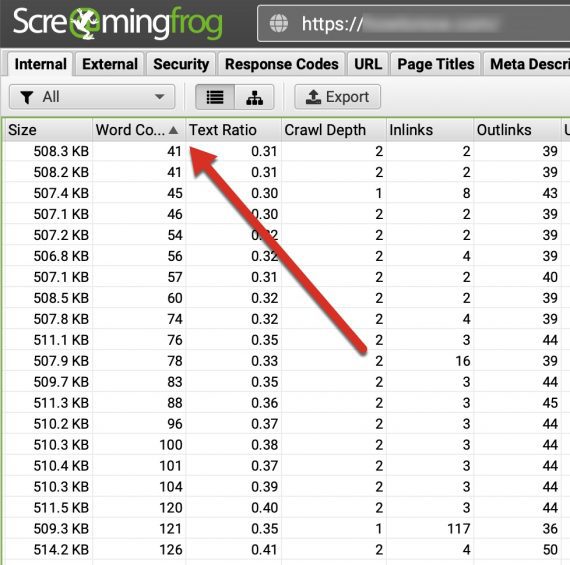

Orphan and near-orphan pages. Orphan and poorly interlinked pages are not an SEO problem unless they should rank. If they should, give those pages many internal links to improve their chances of ranking well. A web crawler can show orphan and near-orphan pages: just sort the list of URLs by the number of internal links pointing to each (“Inlinks”).
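The sorting step can be reproduced from raw link data. This minimal sketch assumes you have a list of known URLs (for example, from a sitemap or analytics, since a true orphan is never reached by link-following alone) and a list of internal (source, target) links. The function names and the cutoff of one inlink are hypothetical choices, and each linking page is counted only once.

```python
def inlink_counts(all_urls, links):
    """Count unique internal inlinks per URL from (source, target) pairs.

    Duplicate links from the same page and self-links are ignored. Every
    known URL gets an entry, so orphan pages appear with a count of 0.
    """
    counts = dict.fromkeys(all_urls, 0)
    for source, target in set(links):
        if source != target and target in counts:
            counts[target] += 1
    return counts


def near_orphans(all_urls, links, max_inlinks=1):
    """Return (url, inlinks) pairs at or below max_inlinks, fewest first."""
    counts = inlink_counts(all_urls, links)
    weak = [(url, n) for url, n in counts.items() if n <= max_inlinks]
    return sorted(weak, key=lambda pair: (pair[1], pair[0]))
```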

—

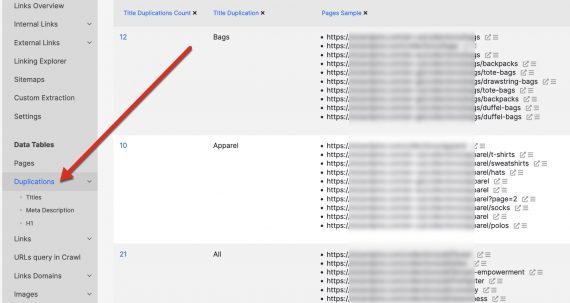

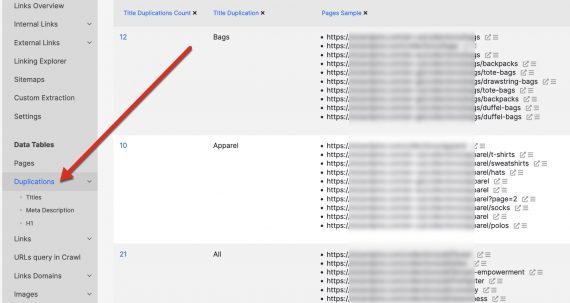

Duplicate content. Eliminating duplicate content prevents splitting link equity. Crawlers can identify pages with the same content as well as identical titles, meta descriptions, and H1 tags.

JetOctopus identifies pages with duplicate titles, meta descriptions, and H1 tags.
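A simple way to approximate this check yourself is to normalize each field and group URLs that share the same value. The sketch below assumes each page is a dict with a "url" key plus fields such as "title", "meta_description", "h1", or "body" (hypothetical names). It catches exact duplicates only; near-duplicate detection needs a similarity measure instead of a hash.

```python
import hashlib
from collections import defaultdict


def find_duplicates(pages, field):
    """Group URLs that share the same normalized value for one field.

    Whitespace is collapsed and text is lowercased before hashing, so long
    body text can be compared cheaply. Empty values are skipped, and only
    groups with two or more URLs are returned.
    """
    groups = defaultdict(list)
    for page in pages:
        value = " ".join(page.get(field, "").split()).lower()
        if not value:
            continue  # a missing value is a separate issue, not a duplicate
        digest = hashlib.sha256(value.encode("utf-8")).hexdigest()
        groups[digest].append(page["url"])
    return [urls for urls in groups.values() if len(urls) > 1]
```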

—

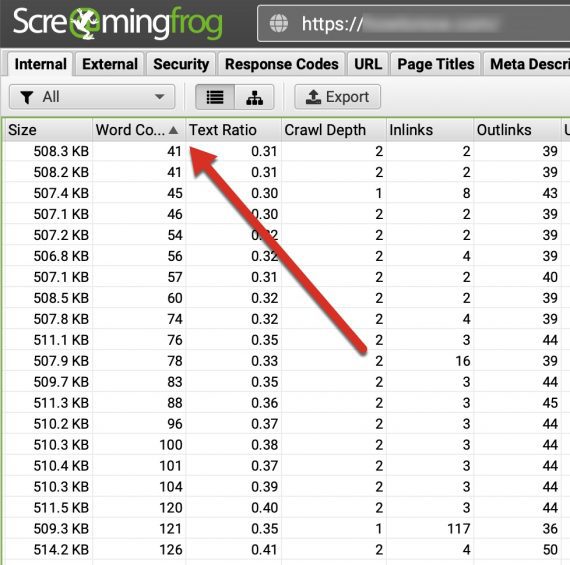

Thin content. Pages with little content do not hurt your rankings unless they are pervasive. Add meaningful text to thin pages you want to rank; otherwise, noindex them.

Screaming Frog lists the number of words on each page, indicating potential thin content.
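A word count like this can be approximated with Python's standard HTML parser. This is a minimal sketch: it counts whitespace-separated words outside `<script>`, `<style>`, and `<noscript>` elements, and the 250-word threshold is an arbitrary example, not a recommended cutoff.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text outside <script>, <style>, and <noscript> elements."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def word_count(html):
    """Count whitespace-separated words in the page's visible text."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()  # flush any buffered text
    return len(" ".join(parser.chunks).split())


def thin_pages(pages, min_words=250):
    """Return URLs (keys of `pages`) whose word count is below min_words."""
    return [url for url, html in pages.items() if word_count(html) < min_words]
```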

—

Slow pages. JetOctopus has a pre-built filter to sort (and export) slow pages. Screaming Frog and most other crawlers have similar capabilities.
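Once response times are exported, the filter itself is a one-line comparison. This sketch assumes a mapping of URL to response time in seconds; the function name and the 1-second default are hypothetical, so pick a threshold that suits your site.

```python
def slow_pages(response_times, threshold_s=1.0):
    """Return (url, seconds) pairs slower than threshold_s, slowest first.

    `response_times` maps URL -> response time in seconds, for example
    taken from a crawler's export.
    """
    slow = [(url, t) for url, t in response_times.items() if t > threshold_s]
    return sorted(slow, key=lambda pair: pair[1], reverse=True)
```

Sorting slowest first puts the pages most worth investigating at the top of the list.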

Advanced Findings

After addressing the six issues above, focus on:

- Images missing alt text,

- Broken external links,

- Pages with title tags that are too short (longer tags provide more ranking opportunities),

- Pages with too few outbound internal links (to improve visitors’ browsing journeys and decrease bounces),

- Pages with missing H1 and H2 HTML headings,

- URLs included in sitemaps but not in internal navigation.
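Several of these checks are easy to prototype with Python's standard library. The sketch below covers three of them: images without an `alt` attribute, pages missing H1 or H2 headings, and sitemap URLs that a link-following crawl never reached. All function names are hypothetical. It treats `alt=""` as acceptable because HTML uses empty alt text for decorative images, and it assumes `crawled_urls` comes from a crawl that follows internal links only, not one seeded from the sitemap.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


class PageAudit(HTMLParser):
    """Records <img> tags with no alt attribute and which of h1/h2 appear."""

    def __init__(self):
        super().__init__()
        self.images_missing_alt = []
        self.headings = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img" and "alt" not in attrs:
            self.images_missing_alt.append(attrs.get("src"))
        elif tag in ("h1", "h2"):
            self.headings.add(tag)


def audit_page(html):
    """Return images lacking alt attributes and any missing h1/h2 headings."""
    parser = PageAudit()
    parser.feed(html)
    parser.close()
    return {
        "images_missing_alt": parser.images_missing_alt,
        "missing_headings": sorted({"h1", "h2"} - parser.headings),
    }


def sitemap_only_urls(sitemap_xml, crawled_urls):
    """URLs listed in the sitemap but not found by following internal links."""
    root = ET.fromstring(sitemap_xml)
    listed = {
        (loc.text or "").strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
    }
    listed.discard("")
    return sorted(listed - set(crawled_urls))
```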
